Research Themes

Analysis of Gaze-Gait Relations

We analyzed how human gait (way of walking) changes with gaze direction. As a preliminary analysis of gaze-gait relations, we used arm and leg swing amplitudes as measures of gait and analyzed the relationship between gaze and arm/leg swing. We observed a tendency for swing amplitude to decrease in the arm farther from the gaze direction and to increase in the arm closer to it. Our results suggest that it may be possible to estimate gaze from gait.

- Analysis of head and chest movements that correspond to gaze directions during walking

H. Yamazoe, I. Mitsugami, T. Okada, Y. Yagi

Experimental Brain Research, To appear, 2019

- Immersive Walking Environment for Analyzing Gaze-gait Relations

H. Yamazoe, I. Mitsugami, T. Okada, T. Echigo, Y. Yagi

Transactions of the Virtual Reality Society of Japan, Vol.22, No.3, 2017

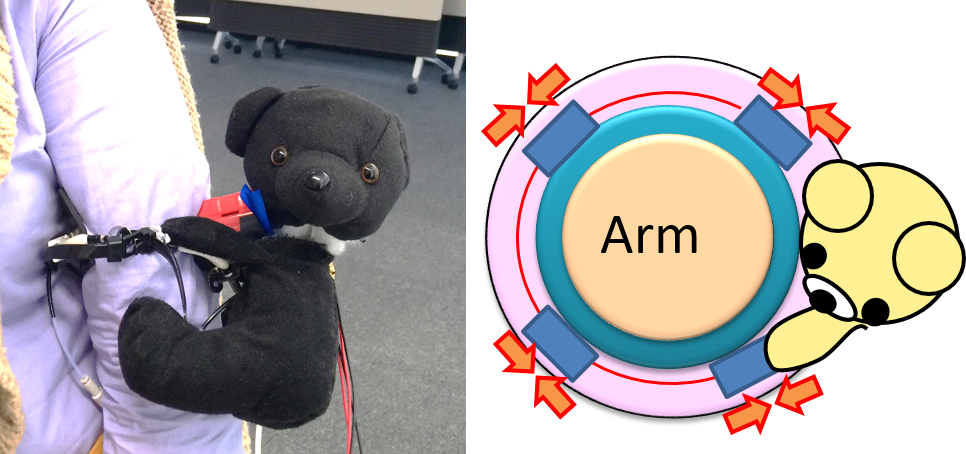

Direction Indication by Pulling the User's Clothing for a Wearable Robot

We propose a tactile expression mechanism that can make physical contact and indicate directions. We previously proposed a wearable robot that uses physical contact to support elderly people outdoors. In that scheme, wearable message robots mounted on the user's upper arm deliver messages such as navigational information. Physical contact can improve the relationship between users and robots. However, our previous prototypes could produce only a limited range of tactile expressions. We therefore propose a tactile expression mechanism for wearable robots that uses a pneumatic actuator array. The proposed system consists of four pneumatic actuators and produces haptic stimuli such as direction indications and stroking of the user's arm. Our wearable robots were originally designed to provide support and communication through two types of physical contact: notification and affection. The proposed mechanism for physical contact and direction indication naturally extends both the notification and the affection abilities of the robot. We expect our robots and the proposed mechanism to support the mobility of senior citizens by reducing their anxiety about going out.

- A tactile expression mechanism using pneumatic actuator array for notification from wearable robots

H. Yamazoe, T. Yonezawa, HCI International 2017, 2017

- Direction indication mechanism by tugging on user’s clothing for a wearable message robot

H. Yamazoe, T. Yonezawa, ICAT-EGVE2015, 2015

Depth Sensor Calibration and Its Compensation

This research proposes a depth measurement error model for consumer depth cameras such as the Microsoft Kinect, together with a calibration method for it. These devices were originally designed as video game interfaces, so the depth maps they produce are not accurate enough for 3D measurement. Several models have been proposed to reduce these depth errors, but they consider only camera-related parameters. Because these depth sensors are projector-camera systems, projector-related parameters should be considered as well. We therefore propose an error model for consumer depth cameras, the Kinect in particular, that considers the intrinsic parameters of both the camera and the projector. To calibrate the error model, we also propose a parameter estimation method that only requires showing a planar board to the depth sensor. The error model and its calibration are a necessary step toward using the Kinect as a 3D measuring device. Experimental results show the validity and effectiveness of the error model and its calibration.
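
As a rough illustration of why a planar board is a convenient calibration target: the true surface is known to be flat, so any deviation of the measured depth from a fitted plane exposes the sensor's depth error. The sketch below fits z = a*x + b*y + c by least squares and reports the residuals. It is not the proposed error model, which also involves the camera and projector intrinsics; the function names `fit_plane` and `depth_residuals` are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c to a list of (x, y, z) points."""
    sxx = sum(x * x for x, _, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)
    sz = sum(z for _, _, z in points)
    n = float(len(points))
    # Normal equations A @ [a, b, c] = rhs, solved with Cramer's rule.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = det3(A)
    if abs(d) < 1e-12:
        raise ValueError("points are degenerate (for example, collinear)")
    coeffs = []
    for col in range(3):
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / d)
    return tuple(coeffs)


def depth_residuals(points, plane):
    """Measured depth minus the depth predicted by the fitted plane."""
    a, b, c = plane
    return [z - (a * x + b * y + c) for x, y, z in points]
```

With real sensor data, residuals that vary systematically across the image or with distance are the kind of error a calibrated model would be expected to explain.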

- Depth Error Correction for Projector-Camera Based Consumer Depth Camera

H. Yamazoe, H. Habe, I. Mitsugami, Y. Yagi, Computational Visual Media, to appear, 2018

- Easy Depth Sensor Calibration

H. Yamazoe, H. Habe, I. Mitsugami, Y. Yagi, ICPR2012, 2012

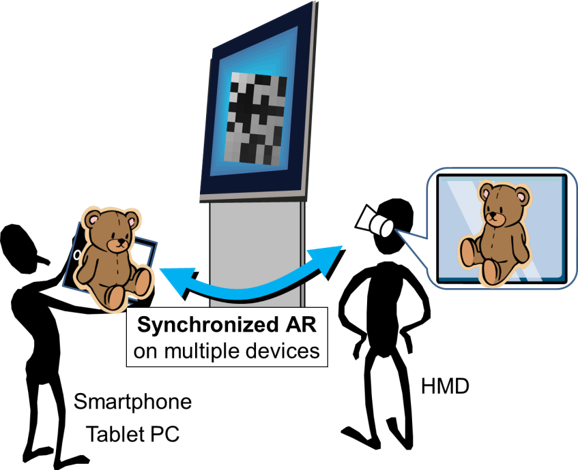

Synchronized AR Environment Using Animation Markers

We proposed a method that estimates the positions and poses of multiple cameras and temporally synchronizes them by using blinking calibration patterns. In the proposed method, the calibration patterns are displayed on tablet PCs or monitors and observed by multiple cameras. From several frames observed by each camera, we can obtain the camera positions and poses as well as the frame correspondences among cameras. The proposed calibration patterns are based on pseudo random volumes (PRV), a 3D extension of pseudo random sequences, and the PRV is what makes this joint geometric and temporal calibration possible. We believe our method is useful not only for multiple-camera systems but also for AR applications for multiple users.
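
To give a feel for why pseudo random sequences suit this kind of identification, the sketch below generates a one-dimensional maximal-length sequence with a linear feedback shift register and shows that every short window of it occurs at exactly one cyclic offset, so a short observation is enough to tell where (or when) in the sequence it was taken. This is only a 1D illustration under assumed parameters (a 4-bit register with feedback taps at positions 4 and 3); how the actual PRV patterns are constructed is not described here, and the function names are hypothetical.

```python
def m_sequence(taps, nbits):
    """One period of a maximal-length LFSR sequence (a pseudo random sequence).

    `taps` are 1-based register positions XORed to form the feedback bit.
    The taps must correspond to a primitive polynomial for the period to be
    2**nbits - 1; (4, 3) with nbits=4 is one such choice.
    """
    state = [1] * nbits  # any non-zero seed works
    out = []
    for _ in range(2 ** nbits - 1):
        out.append(state[-1])
        feedback = 0
        for t in taps:
            feedback ^= state[t - 1]
        state = [feedback] + state[:-1]
    return out


def locate(window, sequence):
    """Return the unique cyclic offset at which `window` occurs in `sequence`."""
    n = len(sequence)
    k = len(window)
    hits = [i for i in range(n)
            if all(sequence[(i + j) % n] == window[j] for j in range(k))]
    if len(hits) != 1:
        raise ValueError("window does not identify a unique offset")
    return hits[0]


seq = m_sequence((4, 3), 4)
# Any 4 consecutive bits pin down the position within the 15-bit period.
window = [seq[(6 + j) % len(seq)] for j in range(4)]
offset = locate(window, seq)
```

Here `offset` recovers the position 6 from which the window was read; a blinking pattern with an analogous property in space and time would let each camera recover both its viewpoint and its frame index.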

- Synchronized AR Environment for Multiple Users Using Animation Markers

H. Yamazoe, T. Yonezawa, The ACM Symposium on Virtual Reality Software and Technology (VRST2014), pp.237-238, 2014

- Geometrical and temporal calibration of multiple cameras using blinking calibration patterns

H. Yamazoe, IPSJ Transactions on Computer Vision and Applications, Vol.6, pp.78-82, 2014

Wearable Robot

We proposed a wearable partner agent that makes physical contact in accordance with the user’s clothing, posture, and detected context. Physical contact is generated by combining haptic stimuli with anthropomorphic motions of the agent. The agent performs two types of behavior: a) it notifies the user of a message by patting the user’s arm, and b) it expresses emotion by strongly enfolding the user’s arm. Our experimental results demonstrated that haptic communication from the agent makes the agent’s messages more intelligible and the agent feel more familiar.

- Wearable partner agent with anthropomorphic physical contact with awareness of user's clothing and posture

T. Yonezawa, H. Yamazoe, ISWC2013, 2013

- Physical Contact using Haptic and Gestural Expressions for Ubiquitous Partner Robot

T. Yonezawa, H. Yamazoe, IROS2013, 2013

Analysis of Gait Changes due to Knee Fixation

We analyzed gait changes by simulating left knee disorders in subjects. Our aim is a method that estimates, from image sequences of a subject's walking, whether a leg disorder is present and which part is affected. However, gait can be changed not only by physical disorders but also by factors such as neural disorders or aging. We therefore simulated physical disorders by having healthy subjects wear a knee brace. We compared normal walking with simulated disordered walking (while wearing the knee brace) to analyze what changes a physical disorder causes in gait, and whether those changes are common to all subjects. The results show that the simulated left knee disorder causes gait changes that are common to all subjects.

- Analysis of Gait Changes Caused by Simulated Left Knee Disorder

T. Ogawa, H. Yamazoe, I. Mitsugami, Y. Yagi

9th EAI International Conference on Bio-inspired Information and Communications Technologies (formerly BIONETICS), pp. 57-60, 2015

- The Effect of the Knee Braces on Gait -Toward Leg Disorder Estimation from Images

T. Ogawa, H. Yamazoe, I. Mitsugami, Y. Yagi

The 2nd Joint World Congress of ISPGR and Gait and Mental Function, 2013

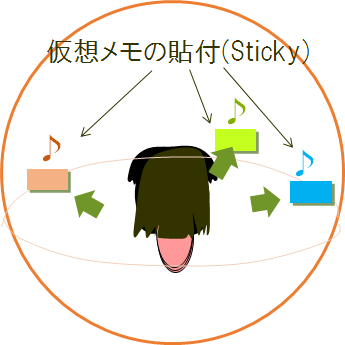

Voisticky/Mixticky: Virtual Multimedia Stickies

We proposed "Voisticky" and "Mixticky", schemes for browsing and recording memorandum-like sticky notes in a three-dimensional virtual space around a user with a smartphone. For intuitive browsing and recall of such memos and information, it is important to achieve both browsability and contemporaneousness of the memos and information. Mixticky allows the user to place memos on, and peel them off, a virtual balloon around her/him, with each memo tied to a relative direction (e.g., front, left, or 45 degrees to the right of front). By pointing the phone in a direction, the user can record and browse voice, movie, image, handwriting, and text memos as virtual sticky notes in that direction. The system employs snapping gestures with the smartphone as metaphors for the “put” and “peel off” motions used with a physical sticky note. The virtual balloon can be carried into various scenarios so that the user can easily resume her or his thought processes anywhere.

- Mixticky: A Smartphone-Based Virtual Environment for Recordable and Browsable Multimedia Stickies

H. Yamazoe, T. Yonezawa, The 2nd Asian Conference on Pattern Recognition (ACPR2013), 2013

- Voisticky: Sharable and Portable Auditory Balloon with Voice Sticky Posted and Browsed by User's Head Direction

T. Yonezawa, H. Yamazoe, H. Terasawa, IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC 2011), pp.118-123, 2011

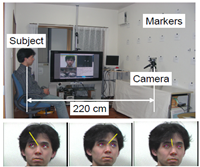

Gaze Estimation without Explicit Calibration Procedures

We proposed a real-time gaze estimation method based on facial-feature tracking with a single video camera that does not require any special user action for calibration. Many gaze estimation methods have already been proposed; however, most conventional gaze tracking algorithms can only be applied in experimental environments because of their complex calibration procedures and lack of usability. Our gaze estimation method can be applied to daily-life situations. Gaze directions are determined as the 3D vectors connecting the eyeball centers and the iris centers. Since the eyeball center and radius cannot be observed directly in images, the eyeball radius and the geometrical relationship between the eyeball centers and the facial features (the face/eye model) are calculated in advance. The 2D positions of the eyeball centers can then be determined by tracking the facial features. Whereas conventional methods require users to perform special actions during calibration, such as looking at several reference points, the proposed method requires no such action; it is realized by combining 3D eye-model-based gaze estimation with circle-based algorithms for eye-model calibration. Experimental results show that the gaze estimation accuracy of the proposed method is 5 degrees horizontally and 7 degrees vertically. The proposed method makes possible various applications that require gaze information in daily-life situations, such as gaze-communication robots and gaze-based interactive signboards.
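
The geometric core of the description above, a gaze direction defined as the vector from the eyeball center to the iris center, can be sketched as follows. This is a minimal sketch under assumed conventions, not the published implementation: both points are taken as already known in a camera frame with x to the right, y downward, and z pointing from the camera into the scene, and angles are measured relative to the direction toward the camera. The function name `gaze_angles` is hypothetical.

```python
import math


def gaze_angles(eyeball_center, iris_center):
    """Return (horizontal, vertical) gaze angles in degrees.

    Assumed camera frame: x right, y down, z away from the camera.
    (0, 0) means the eye looks straight at the camera; positive horizontal
    is toward +x and positive vertical is upward.
    """
    gx = iris_center[0] - eyeball_center[0]
    gy = iris_center[1] - eyeball_center[1]
    gz = iris_center[2] - eyeball_center[2]
    if gx == 0 and gy == 0 and gz == 0:
        raise ValueError("eyeball and iris centers coincide")
    horizontal = math.degrees(math.atan2(gx, -gz))
    vertical = math.degrees(math.atan2(-gy, math.hypot(gx, gz)))
    return horizontal, vertical


# Example: a 12 mm eyeball radius (an assumed value), eye 600 mm from the
# camera, gaze rotated 10 degrees toward +x.
r = 12.0
center = (0.0, 0.0, 600.0)
iris = (r * math.sin(math.radians(10)), 0.0, 600.0 - r * math.cos(math.radians(10)))
h, v = gaze_angles(center, iris)
```

In the method described above, the hard part is obtaining the two 3D points from a single camera without explicit calibration; once they are available, the angle computation itself is this simple.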

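- Automatic Calibration of a 3D Eyeball Model for Gaze Estimation Using a Single Camera (in Japanese)

H. Yamazoe, T. Yonezawa, A. Utsumi, S. Abe, IEICE Transactions on Information and Systems (Japanese Edition), Vol.J94-D, No.6, pp.998-1006, 2011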

- Remote Gaze Estimation with a Single Camera Based on Facial-Feature Tracking

H. Yamazoe, A. Utsumi, T. Yonezawa, S. Abe

Eye Tracking Research & Applications Symposium (ETRA2008), pp.245-250, 2008

Video Communication Support System for Elderly People

We proposed a video communication assist system that uses a companion robot and adapts to the user's conversational attitude. To maintain a conversation and achieve comfortable communication, assistance must be aware of the user's attitude. First, the system estimates the user's conversational state with a machine learning method. Next, depending on the current context of the user's attitude, the system responds in three ways: the robot expresses active listening behaviors, such as nodding and gaze turns, to compensate for the listener's attitude when she/he is not really listening to another user's speech; the robot shows communication-evoking behaviors (topic provision) to compensate for the lack of a topic; and the system switches the camera images to create an illusion of eye contact. Empirical studies and a demonstration experiment showed that i) both the robot's active listening behaviors and the switching of the camera image compensate for the other person's attitude, ii) elderly people prefer long intervals between the robot's behaviors, and iii) the topic provision function is effective for awkward silences.

- Assisting video communication by an intermediating robot system corresponding to each user's attitude

T. Yonezawa, H. Yamazoe, Y. Koyama, S. Abe, K. Mase

Transactions of Human Interface Society, Vol.13, No.3, pp.181-193, 2011

- Estimation of user conversational states based on combination of user actions

H. Yamazoe, Y. Koyama, T. Yonezawa, S. Abe, K. Mase, CASEMANS 2011, pp.33-37, 2011

Interactive Guideboard with Gaze-communicative Stuffed-toy Robot

We introduced an interactive guide plate system that combines a gaze-communicative stuffed-toy robot with a gaze-interactive display board. The stuffed-toy robot attached to the system naturally shows anthropomorphic guidance corresponding to the user’s gaze orientation. The guidance is presented through a) gaze-communicative behaviors of the stuffed-toy robot, which uses joint attention and eye-contact reactions to virtually express its own mind, in conjunction with b) vocal guidance and c) projection on the guide plate. We adopted our image-based remote gaze-tracking method to detect the user’s gaze orientation. Results from empirical studies with subjective and objective evaluations, and from observations of our demonstration experiments in a semipublic space, show i) the overall operation of the system, ii) that the robot’s gaze behaviors elicit the user’s interest, and iii) the effectiveness of gaze-communicative guidance by the anthropomorphic robot.

- Attractive, Informative, and Communicative Robot System on Guide Plate as an Attendant with Awareness of User's Gaze

T. Yonezawa, H. Yamazoe, A. Utsumi, S. Abe, Paladyn, Journal of Behavioral Robotics, Vol.4, No.2, pp.113-122, 2013

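- Interactive Robot Signboard Using Monocular Multi-Person Gaze Estimation with a Wide-Field-of-View, High-Resolution Camera (in Japanese)

H. Yamazoe, A. Utsumi, T. Yonezawa, S. Abe, Meeting on Image Recognition and Understanding (MIRU2008), pp.1638-1643, 2008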

Head Pose Estimation using Body-mounted Camera

We proposed a body-mounted system that captures user experience as audio/visual information. The proposed system consists of two cameras (a head-detection camera and a wide-angle camera) and a microphone. The head-detection camera captures the user's head motions, while the wide-angle color camera captures images of the user's frontal view. An image region approximately corresponding to the user's view is then synthesized from the wide-angle image based on the estimated head motions. The synthesized image and head-motion data are stored in a storage device together with the audio data. Compared with head-mounted cameras, this system is easier to put on and take off, and it is visually less obtrusive to third persons. With the proposed system, we can simultaneously record audio data, images in the user's field of view, and head gestures (nodding, shaking, etc.). These data contain significant information for recording and analyzing human activities and can be used in a wider range of application domains, such as digital diaries or interaction analysis. Experimental results demonstrate the effectiveness of the proposed system.
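
One simple way to picture the user-view synthesis step is as selecting a window in the wide-angle image whose center follows the estimated head direction. The sketch below does this under strong simplifying assumptions that are not taken from the published system: an ideal pinhole camera with a focal length given in pixels, no lens distortion, and no offset between the head and the body-mounted camera. The function name and parameters are hypothetical.

```python
import math


def user_view_window(yaw_deg, pitch_deg, focal_px, image_size, view_size):
    """Return (left, top, right, bottom) of the user-view crop in pixels.

    yaw_deg: head rotation to the right; pitch_deg: head rotation upward.
    The window is clamped so that it stays inside the wide-angle image.
    """
    img_w, img_h = image_size
    view_w, view_h = view_size
    # Pinhole projection of the head direction onto the image plane.
    cx = img_w / 2 + focal_px * math.tan(math.radians(yaw_deg))
    cy = img_h / 2 - focal_px * math.tan(math.radians(pitch_deg))
    left = round(min(max(cx - view_w / 2, 0), img_w - view_w))
    top = round(min(max(cy - view_h / 2, 0), img_h - view_h))
    return left, top, left + view_w, top + view_h


# Head facing straight ahead: the window sits at the image center.
centered = user_view_window(0, 0, 400, (1280, 960), (320, 240))
# Head turned far to the right: the window is clamped to the image edge.
clamped = user_view_window(80, 0, 400, (1280, 960), (320, 240))
```

A real wide-angle lens would need a distortion model in place of the `tan` mapping, which is one reason this should be read only as a conceptual sketch.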

- A Body-mounted Camera System for Head-pose Estimation and User-view Image Synthesis

H. Yamazoe, A. Utsumi, K. Hosaka, M. Yachida, Image and Vision Computing, Vol.25, No.12, pp.1848-1855, 2007

- A Body-mounted Camera System for Capturing User-view Images without Head-mounted Camera

H. Yamazoe, A. Utsumi, K. Hosaka, ISWC2005, pp.114-121, 2005

Human Tracking using Head-mounted Cameras and Fixed Cameras

We proposed a method to estimate human head positions and poses by using head-mounted cameras and fixed cameras.

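- Head Position and Pose Estimation Using Head-Mounted Camera Images and Fixed Camera Images (in Japanese)

H. Yamazoe, A. Utsumi, N. Tetsutani, M. Yachida, IEICE Transactions on Information and Systems (Japanese Edition), Vol.J89-D, No.1, pp.14-26, 2006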

- Vision-based Human Tracking System by using Head-mounted Cameras and Fixed Cameras

H. Yamazoe, A. Utsumi, N. Tetsutani, M. Yachida, ACCV2004, pp.682-687, 2004

Multiple Camera Calibration

We proposed a distributed automatic method of calibrating cameras for multiple-camera-based vision systems. Automatic calibration is needed because manual calibration is a difficult and time-consuming task. However, the data size and computational costs of automatic calibration increase as the number of cameras increases. We solved these problems by employing a distributed algorithm. With our method, each camera estimates its position and orientation through local computation using observations shared with neighboring cameras. We formulated our method with two kinds of geometrical constraints: the essential matrix and the homography. We applied the latter formulation to a human tracking system to demonstrate the effectiveness of our method.
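
For readers unfamiliar with the first of these constraints, the sketch below shows the standard epipolar relation that an essential matrix encodes between two neighboring cameras: for a relative rotation R and translation t, E = [t]x R, and corresponding normalized image points satisfy x2^T E x1 = 0. It illustrates only this textbook constraint with made-up values for R, t, and the 3D point; the distributed estimation procedure and the homography formulation are not reproduced here.

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matvec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def skew(t):
    """Cross-product matrix [t]x, so that skew(t) @ v equals t x v."""
    return [[0.0, -t[2], t[1]],
            [t[2], 0.0, -t[0]],
            [-t[1], t[0], 0.0]]


def essential_matrix(R, t):
    return matmul(skew(t), R)


def epipolar_residual(E, x1, x2):
    """x2^T E x1; zero for a correct correspondence."""
    Ex1 = matvec(E, x1)
    return sum(x2[i] * Ex1[i] for i in range(3))


# Illustrative relative pose: 10-degree rotation about the y axis plus a shift.
a = math.radians(10)
R = [[math.cos(a), 0.0, math.sin(a)],
     [0.0, 1.0, 0.0],
     [-math.sin(a), 0.0, math.cos(a)]]
t = [0.5, 0.0, 0.1]
E = essential_matrix(R, t)

X1 = [0.3, -0.2, 4.0]                      # 3D point in camera 1 coordinates
X2 = [p + q for p, q in zip(matvec(R, X1), t)]  # same point in camera 2
x1 = [c / X1[2] for c in X1]               # normalized image coordinates
x2 = [c / X2[2] for c in X2]
residual = epipolar_residual(E, x1, x2)
```

Because the residual depends only on the relative pose of a camera pair, constraints of this kind can be evaluated locally from observations that two neighboring cameras share.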

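- Distributed Camera Position and Pose Estimation in Multi-View Vision Systems and Its Application to a Human Tracking System (in Japanese)

H. Yamazoe, A. Utsumi, N. Tetsutani, M. Yachida, The Journal of the Institute of Image Information and Television Engineers, Vol.58, No.11, pp.1639-1648, 2004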

- Automatic camera calibration method for distributed multiple camera based human tracking system

H. Yamazoe, A. Utsumi, N. Tetsutani, M. Yachida, In Proc. of the 5th Asian Conference on Computer Vision (ACCV2002), pp. 424-429, 2002